A new feature on THN.com looks at every team's chances of making the playoffs, updated every day. Here's how we determine the odds.

New feature alert at THN.com: Playoff chances, updated every morning, and just in time for the stretch drive. You may have noticed these referenced in our monthly trend reports or perhaps from following me on Twitter, but they now have a new location: here.

In a sport as chaotic and parity-driven as hockey, the race to the playoffs is always entertaining. It feels like everyone has a chance, even if they realistically don’t, and as the season moves forward, things become more certain and teams slowly fall out of the race. As the gruelling 82-game schedule comes to an end, it becomes more and more exciting to be a hockey fan. Knowing just how likely events are to transpire only increases that effect.

You may roll your eyes at that, but we all do it implicitly in our heads. When your favourite team is down by two in the third, in your head you know a comeback is unlikely, but you hold out hope because you’ve seen it done before. When that deficit turns to three or four goals, that hope fades away.

Last week’s Super Bowl is a great example, as it felt like the game was over when Atlanta went up 28-3. In our heads, we knew it would take some sort of miracle for the Patriots to win the game, and when they did, we understood the magnitude of such a comeback based on the implicit unlikelihood of it happening in our head. How great a comeback is depends on how much you couldn’t believe it just happened, and that belief comes from the mental arithmetic we do in deciding how unlikely it should’ve been to see what just occurred.

A statistical model is basically that, only it’s an actual number instead of a feeling. Rather than thinking “the Patriots are toast” it’s “the Patriots’ chances of winning are pretty slim now at one percent.” Knowing the Patriots were, for once, deeply against the odds made the comeback that much more exhilarating. That’s the goal with these playoff probabilities: not only to inform and analyze, but to give context to the playoff race from a fan perspective.

And I know there are a lot of fans out there who pay attention to things like playoff chances, as they’re often used as talking points for the exact reasons listed above. The most popular one for a while has been SportsClubStats.com, but their method uses a team’s current record, which tends to not be very predictive (see Maple Leafs, Toronto: 2011-12, 2013-14). It also omits trades and injuries, which can make for pretty big swings.

There’s also HockeyViz.com, which is a much better alternative as it uses metrics known to be more predictive (detailed here) and does it at the player level to account for trades and injuries. Finally, there are two others that use team-level expected goals, from @DTMAboutHeart (who tweets them regularly) and moneypuck.com.

It’s a crowded field and figuring out which numbers to trust (the three just mentioned are all trustworthy alternatives, for what it’s worth) depends on how much you trust the methodology.

Here’s how we’re doing things.

Methodology

Like the model over at HockeyViz.com, this one is player based, which should be an asset come trade deadline time. Doing things at the player level for this sort of thing is the way to go, because teams are rarely playing healthy and lineups are very different after the deadline. Using team numbers works fine, but you’ll get cases like the Tampa Bay Lightning losing Steven Stamkos to injury for most of the season, or the Florida Panthers getting Aleksander Barkov and Jonathan Huberdeau back on the same night after playing most of the season with both injured. The former will have inflated numbers while the latter will have deflated ones, and in both cases their chances won’t reflect the team’s current strength.

Measuring player strength in a sport as dynamic as hockey is extremely tricky and nothing will ever be perfect. This model uses Game Score, a stat that weighs a variety of important box-score stats in their relation to goals, and I’ve found it does a decent job of roughly measuring player value. Again, not perfect, but good enough, easy to understand, and the results line up pretty well with most common conceptions about player value -- usually.

But one season isn’t really enough information to know how good a player is or will be. Last year when Sidney Crosby had 19 points in his first 28 games, none of us believed he was suddenly a 55 point scorer, because we had prior knowledge of him being a reliable 90 point player. While his expectation would get a bump down from a slump that drastic, it shouldn’t have been to the point where anyone thinks 55 is reasonable. That’s why, for the purposes of forecasting, this model uses the last three seasons of Game Score, weighted by recency.

That means this season is the most important, last season is slightly less important, and the season before that is the least important. To figure out how much each season should matter, I put each individual component of Game Score (separated by position) into a multiple linear regression (think line of best fit from math class, except there’s more than just one) that determined not only how much to weight each season, but also how much the final number should be regressed to the mean. The more repeatable a stat is, the less it needs to be regressed. What that basically means is you take that final output and you add a small chunk of average to it to bring everyone a little closer together, depending on how confident you are in the result.

How much that chunk of average affects a skater depends on how large his sample size is, so a three-year veteran playing big minutes won’t see a huge dent in his projections. It all depends on certainty, and if we’ve seen a guy do the same thing three years in a row, we can be reasonably confident it can continue. New data updates what we think, but the prevailing wisdom of the past few years isn’t forgotten. If a guy is extra hot or extra cold, it doesn’t drastically change what we think of him until he sustains it.

It’s much different for guys who have played far fewer minutes, like rookies. Because there’s less certainty, their projections will be pushed much closer to average until we have a better read on what they’re capable of.
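
To make the idea concrete, here is a minimal Python sketch of recency weighting plus regression to the mean. It is not the model’s actual code: the season weights (0.5, 0.3, 0.2), the league-average rate of 0.5 and the 500 “phantom” minutes of average play are made-up numbers for illustration, and the function name `project_rate` is hypothetical. The real weights come from the regression described above and differ by stat and position.

```python
def project_rate(seasons, weights=(0.5, 0.3, 0.2),
                 league_avg=0.5, prior_minutes=500.0):
    """Project a per-minute rate from up to three seasons.

    seasons: list of (rate, minutes) tuples, most recent season first.
    All default values are illustrative assumptions, not the model's.
    """
    weighted_total = 0.0
    weighted_minutes = 0.0
    for (rate, minutes), weight in zip(seasons, weights):
        weighted_total += weight * minutes * rate
        weighted_minutes += weight * minutes
    # Regression to the mean: blend in a fixed chunk of league-average play.
    # The fewer real minutes a player has, the more that chunk matters.
    return ((weighted_total + prior_minutes * league_avg)
            / (weighted_minutes + prior_minutes))


# A three-year veteran and a rookie who have both produced at a rate of 1.0.
veteran = project_rate([(1.0, 1500), (1.0, 1500), (1.0, 1500)])
rookie = project_rate([(1.0, 300)])
print(round(veteran, 3))  # 0.875
print(round(rookie, 3))   # 0.615
```

Both players have identical results on the ice, but the rookie’s projection lands much closer to the assumed average of 0.5 because there are far fewer minutes backing it up.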

While there’s not much certainty in rookie results yet, based on age alone it’s likely they improve as they get closer to their peak, which is why, along with regressing each player’s results, the future projection also gets adjusted for age. Players between 18 and 26 get pushed up, while older players get pushed down.

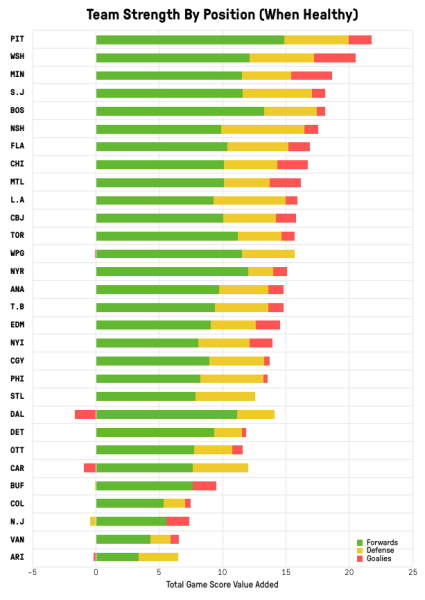

After getting their projected Game Score, the number is translated to above replacement (how much better the player is over the 360th-ranked forward, 180th defenseman, and 60th goalie on a per minute basis) and then converted to a win total, simply dubbed Game Score Value Added (GSVA) if you remember our pre-season projections. Figuring out how strong each team is after that is pretty easy: just add all the wins together. (And if you want to see how strong the model believes each player is, you can go here).

While it’s not perfect, and there’ll be some concerns about where certain teams are (Boston and Winnipeg – who are probably the most controversial – are carried by incredible top-end talent that drowns out inferior depth), I think for the most part it’s a fair ranking of strength. This is trying to predict how good teams will be in the future, not measure what they’ve already done, so keep that in mind.

Once that’s put together, the teams have to face off over the rest of their schedule. A probability (using Bill James’ Log5 formula) is placed on which team is more likely to win each and every game based on who’s stronger, which team is at home, and how rested they are.
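
For the curious, Log5 itself is short enough to write out. The sketch below shows the standard formula in Python, taking each team’s expected win percentage against an average opponent. How this model folds in home ice and rest isn’t spelled out here, so the `apply_edge` helper and its 1.2 odds multiplier are purely hypothetical stand-ins for that step.

```python
def log5(p_a, p_b):
    """Bill James' Log5: chance that team A beats team B, given each
    team's win percentage against an average opponent."""
    return p_a * (1 - p_b) / (p_a * (1 - p_b) + p_b * (1 - p_a))


def apply_edge(p, odds_multiplier=1.2):
    """Hypothetical adjustment for home ice or rest: scale the odds.
    The 1.2 multiplier is an illustrative assumption, not the model's."""
    odds = p / (1 - p) * odds_multiplier
    return odds / (1 + odds)


neutral = log5(0.6, 0.4)        # a .600 team against a .400 team
at_home = apply_edge(neutral)   # same matchup with the assumed home bump
print(round(neutral, 3))  # about 0.692
print(round(at_home, 3))  # about 0.73
```

Against a perfectly average .500 team the formula simply returns a team’s own win percentage, which is a handy sanity check.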

In terms of accuracy, the model has “called” the winner for each game this season just over 59 percent of the time (which is pretty good considering the upper limit for predicting hockey is around 62 percent although it’s still early) and has seen a decent return on investment against betting lines.

After getting individual game probabilities, it’s time to “play” the rest of the season. But sports are inherently random, so playing it through just once won’t tell you much, because there are so many different ways things can change. Instead, the season is played out 10,000 times and the average result of what happened is essentially the most likely scenario. From those simulations, you can count how many times a team made the playoffs or didn’t, and how many points they got on average, which is what we’re showing here.
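
Here is a rough Python sketch of that simulation step, under some loudly simplified assumptions: a made-up three-team league, two points for a win and none for a loss (no overtime loser point), ties in the standings broken at random, and no divisions or wild cards. The function name `simulate_playoff_odds` and all the inputs are hypothetical.

```python
import random


def simulate_playoff_odds(points, games, spots, n_sims=10_000, seed=0):
    """Play out the remaining schedule n_sims times.

    points: dict of team -> current points
    games:  list of (home, away, home_win_probability)
    spots:  number of playoff berths available
    Returns dict of team -> (playoff probability, average final points).
    """
    rng = random.Random(seed)
    made = {team: 0 for team in points}
    total = {team: 0 for team in points}
    for _ in range(n_sims):
        season = dict(points)
        for home, away, p_home in games:
            winner = home if rng.random() < p_home else away
            season[winner] += 2  # simplification: no overtime loser point
        # Rank by points, breaking ties randomly (a simplification).
        ranked = sorted(season, key=lambda t: (season[t], rng.random()),
                        reverse=True)
        for team in ranked[:spots]:
            made[team] += 1
        for team in season:
            total[team] += season[team]
    return {t: (made[t] / n_sims, total[t] / n_sims) for t in points}


standings = {"A": 90, "B": 84, "C": 83}
schedule = [("B", "C", 0.55), ("C", "B", 0.50)]
odds = simulate_playoff_odds(standings, schedule, spots=2)
for team, (chance, avg_points) in odds.items():
    print(team, round(chance, 3), round(avg_points, 1))
```

In this toy league, B only misses if C wins both remaining games, which happens 0.45 × 0.5 = 22.5 percent of the time, so B’s simulated chances should land near 77.5 percent. The real thing works the same way, just with every team, every remaining game and the actual playoff format.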

That’s pretty much the gist of how this thing works and it’ll be interesting to look back on at the end of the season to see what was “right” or “wrong.” Just remember, though, that’s not what we’re measuring here. This is about likelihoods, and if something unlikely ends up happening, it doesn’t mean the model was necessarily wrong, it just makes what happened that much more impressive.